wav Cello 1 # get the labels of the audio dataset head () fname label manually_verified 0 00044347.

read_csv ( 'path/to/freesound/train.csv' ) df_train. # get the labeled data from the `train.csv` fileĭf_train = pd.

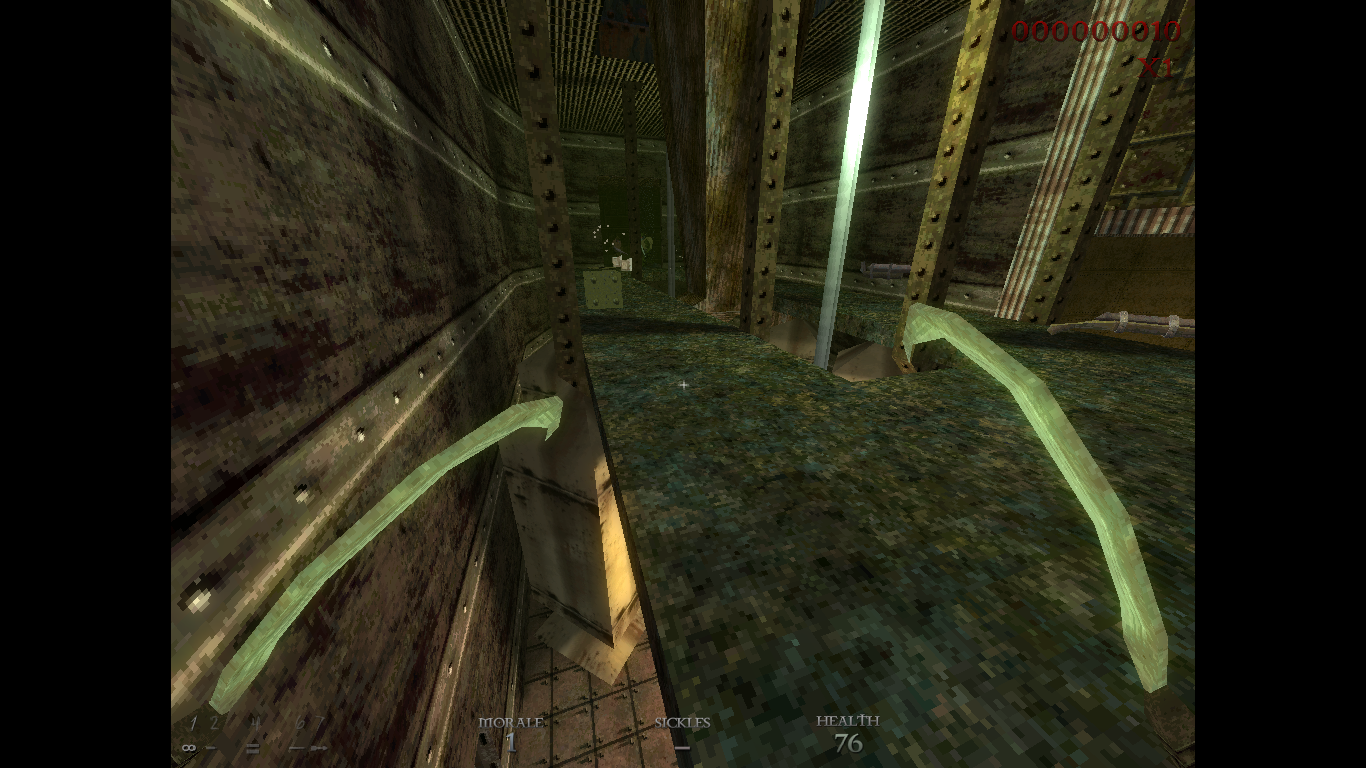

In our case, we need to store those images, unfortunate we have to plot them then store the plot. colorbar ( format = '%+02.0f dB' ) # Make the figure layout compactįor instance, the sounds of a Drawer that opens or closes looks like: title ( 'mel power spectrogram' ) # draw a color bar specshow ( log_S, sr = sr, x_axis = 'time', y_axis = 'mel' ) # Put a descriptive title on the plot # sample rate and hop length parameters are used to render the time axis figure ( figsize = ( 12, 4 )) # Display the spectrogram on a mel scale

We'll use the peak power (max) as reference. melspectrogram ( y, sr = sr, n_mels = 128 ) # Convert to log scale (dB). load ( audio_path, sr = None ) # Let's make and display a mel-scaled power (energy-squared) spectrogram The following snippet converts an audio into a spectrogram image: def plot_spectrogram ( audio_path ): y, sr = librosa. Another option will be to use matplotlib specgram(). We will be using the very handy python library librosa to generate the spectrogram images from these audio files.

These audio files are uncompressed PCM 16 bit, 44.1 kHz, mono audio files which make just perfect for a classification based on spectrogram. Once you downloaded this audio dataset, we can then start playing with Data PreProcessing "Acoustic_guitar", "Applause", "Bark", "Bass_drum", "Burping_or_eructation", "Bus", "Cello", "Chime", "Clarinet", "Computer_keyboard", "Cough", "Cowbell", "Double_bass", "Drawer_open_or_close", "Electric_piano", "Fart", "Finger_snapping", "Fireworks", "Flute", "Glockenspiel", "Gong", "Gunshot_or_gunfire", "Harmonica", "Hi-hat", "Keys_jangling", "Knock", "Laughter", "Meow", "Microwave_oven", "Oboe", "Saxophone", "Scissors", "Shatter", "Snare_drum", "Squeak", "Tambourine", "Tearing", "Telephone", "Trumpet", "Violin_or_fiddle", "Writing"
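To make the plot-then-store step a bit more concrete, here is a minimal sketch of one way it could look. It is not the code from this post: the function name `save_spectrogram` and the `NFFT`/`noverlap` values are assumptions picked for illustration, and it uses the matplotlib specgram() option mentioned earlier together with Python's built-in `wave` module, which is enough for the 16-bit mono PCM files described here. Because it relies on specgram(), the frequency axis is linear rather than mel-scaled.

```python
import wave

import matplotlib
matplotlib.use("Agg")  # render off-screen; no display is needed to save files
import matplotlib.pyplot as plt
import numpy as np


def save_spectrogram(audio_path, image_path):
    """Plot a spectrogram of a 16-bit mono PCM WAV file and store the plot as an image."""
    # Read the raw samples; this assumes 16-bit mono PCM, as described for this dataset.
    with wave.open(audio_path, "rb") as wav:
        sr = wav.getframerate()
        samples = np.frombuffer(wav.readframes(wav.getnframes()), dtype=np.dtype("<i2"))

    fig = plt.figure(figsize=(12, 4))
    ax = fig.add_axes([0, 0, 1, 1])  # let the axes fill the whole figure
    # NFFT and noverlap are illustrative values, not tuned for this dataset.
    ax.specgram(samples.astype(np.float32), NFFT=1024, Fs=sr, noverlap=512)
    ax.set_axis_off()  # drop ticks and labels so only the spectrogram is stored
    fig.savefig(image_path)
    plt.close(fig)  # free the figure; matters when converting many clips
```

Hiding the axes and closing each figure after saving keeps the stored images free of decorations and stops memory use from growing when the function is called in a loop over the whole dataset.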

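Since every clip in `train.csv` comes with a label, one possible way to organise the stored spectrogram images is one sub-folder per label. The sketch below only plans the target paths; the helper name `plan_image_paths`, the folder layout, and the `.png` extension are assumptions for illustration, not something the dataset or this post prescribes.

```python
from pathlib import Path

import pandas as pd


def plan_image_paths(df, image_root):
    """Map each WAV file name to a target image path, one sub-folder per label."""
    root = Path(image_root)
    return {
        row.fname: root / row.label / (Path(row.fname).stem + ".png")
        for row in df.itertuples(index=False)
    }


# A single row shaped like the `train.csv` sample shown in this post.
df_example = pd.DataFrame(
    {"fname": ["00044347.wav"], "label": ["Cello"], "manually_verified": [1]}
)
paths = plan_image_paths(df_example, "spectrograms")
```

A label-per-folder layout is convenient because many image classification tools can infer the class of each image from its parent directory name.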